We're going to take your computer to the next level. What hardware will get you the highest possible frame rate in that newly launched game? Which CPU is the best fit for your multitasking needs? What type of cooling will keep your computer running cool all night long? High-end computer rigs are what we're all about.

Thursday, December 22, 2011

Friday, December 16, 2011

Intel Eliminating Popular CPUs

Intel has notified its partners to prepare for a significant change in desktop processor supply in 2012, which clears the portfolio to accommodate the Sandy Bridge-E processors as well as the upcoming 22 nm product generation.

Intel is set to move the Core i7-930, i7-950, i7-960, i7-980 and i7-990X on the LGA 1366 platform to end-of-life status. The LGA 1366 platform, first introduced in November of 2008, represented Intel's high-end desktop platform. To this day, it continues to hold its own in performance against today's top processors (Bulldozer, Sandy Bridge and Sandy Bridge-E). LGA 1366 was replaced by LGA 2011 (Sandy Bridge-E) on November 14, 2011.

Intel is also set to move the Core i3-540, i5-650, i5-660, i5-670, i5-680, i7-860 and i7-870, along with the Pentium G6950 and G6960, on the LGA 1156 platform to end-of-life status. LGA 1156, first introduced in September of 2009, represented Intel's mainstream desktop platform. It was short-lived, replaced by LGA 1155 (Sandy Bridge) in January of 2011.

The LGA 1366 and LGA 1156 boxed parts will be available for order until June 29, 2012. Tray SKUs will be available until December 7, 2012 or until supplies are depleted.

By Doug Crothers

Monday, December 5, 2011

Benchmark Results: Battlefield 3 on Nvidia Cards

Here are the Nvidia-based charts, covering 11 different cards at three resolutions. Note that the GeForce GTX 400- and 500-series cards are DirectX 11-capable, and the 200-series boards are limited to DirectX 10. This is an important distinction because, even though the 200s throw up some reasonable performance numbers (especially the GTX 295), they’re not doing as much work. The game automatically dials Terrain Quality down from High to Low, yielding some pretty nasty artifacts as shadows interact with the environment.

Those triangles should be smooth shadows.

Hopefully Nvidia can fix this in its drivers. For now, those older Nvidia cards don't do Battlefield 3 any favors; stick to the newer stuff (or go the AMD route—incidentally, AMD’s DX 10 cards don’t have this problem).

Update: Nvidia let us know that the issue we encountered is caused by a shadow corruption bug in its driver, which should be fixed in the next release. The company says it hopes to have that build available within a couple of weeks.

Mentally filtering out the 200-series boards, the rest of Nvidia’s armada scales down fairly evenly. At 1680x1050 (sticking to High quality settings), I’d want at least a GeForce GTX 560 Ti.

The same card would probably suffice at 1920x1080 too, though a GTX 570 would be even better.

Owners of 30” displays already threw down some big bucks for 2560x1600; a GeForce GTX 590 is the right two-slot solution there. If you’re willing to go SLI, two GeForce GTX 570s perform better (and cost less), as we’ll see in a few pages.

By Chris Angelini

Wednesday, November 30, 2011

AMD 8 Core CPU

AMD launched its FX line of processors today, and two gaming hardware builders have already jumped at the chance to provide the world's first gaming rig powered by a native 8-core desktop processor.

Utilizing AMD's Bulldozer multi-core architecture, the new FX series of AMD CPUs sets its sights on high-end PC gaming, with up to eight completely unlocked native cores ready to tackle the toughest in HD multimedia creation and multi-display PC gaming at a relatively low price.

At least that's what the marketing materials tell us. It all sounds good on paper, but the FX chips aren't quite the new power revolution they were expected to be.

That won't stop high-end PC gaming machine makers from jumping on the FX bandwagon. Both Origin PC and CyberpowerPC sent out announcements this morning, letting the world know that they were ready to build systems featuring the new four, six, and eight core chips.

CyberpowerPC's Gamer Scorpius line of gaming PCs (pictured above because they sent pictures) start at $576 and feature all four of the new chip models: The AMD FX-8150 3.60 GHz 8-Core CPU; AMD FX-8120 3.10 GHz 8-Core CPU; AMD FX-6100 3.30 GHz 6-Core CPU; and the AMD FX-4100 3.6 GHz Quad-Core CPU.

Origin PC will also be featuring all four chips in its systems, and while they don't do dedicated product lines they do offer quotes.

"Origin PC works directly with the best companies in the industry to deliver our customers with the latest technology first," said Kevin Wasielewski, Origin PC CEO and cofounder. "AMD's new unlocked 8-core processors deliver the best price to performance value that we have ever seen."

Of course these aren't the only companies employing the new processor line; they're just the ones that showed up in my inbox this morning. I'm sure every gaming hardware manufacturer will be tossing these things about like popcorn by the end of the day.

I'll probably be sticking with my Intel Core i7 for another couple of years. It's served me well so far, and I've not had an AMD-powered desktop since the turn of the millennium.

By Kotaku

Sunday, November 27, 2011

Big Loss for Rambus in Antitrust Case

There are few companies in the tech world as infamous as Rambus, an IP-only RAM development firm. For the better part of 10 years now they have been engaged in court cases with virtually every RAM and x86 chipset manufacturer around over the violation of their patents. Through a long series of events SDRAM did end up implementing Rambus technologies, and the American courts have generally upheld the view that, in spite of everything that happened while Rambus was a member of the JEDEC trade group, their patents and claims against other manufacturers for infringement are legitimate. At this point most companies using SDRAM have settled with Rambus on these matters.

Since then Rambus has moved on to a new round of lawsuits, focusing on the aftermath of Rambus’s disastrous attempt to get RDRAM adopted as the standard RAM technology for computers. Rambus has long held that they did not fail for market reasons, but rather because of collusion and widespread price fixing by RAM manufacturers, who purposely wanted to drive Rambus out of the market in favor of their SDRAM businesses. The price fixing issue was investigated by the Department of Justice – who found the RAM manufacturers guilty – which in turn Rambus is using in their suits as further proof of collusion against Rambus.

The biggest of these suits was filed in 2004 against the quartet of Samsung, Infineon, Hynix, and Micron. In 2005 Infineon settled with Rambus for $150 million while in 2010 Samsung settled with Rambus for $900 million, leaving just Hynix and Micron to defend. That suit finally went to court in 2011, with Rambus claiming that collusion resulted in them losing $4 billion in sales, which as a result of California’s treble damage policy potentially put Hynix and Micron on the line for just shy of $12 billion in damages.

Today a verdict was finally announced in the case, and it was against Rambus. A 12-member jury found in a 9-3 vote that Hynix and Micron did not conspire against Rambus, effectively refuting the idea that RAM manufacturers were responsible for Rambus’s market failure. As Rambus’s business relies primarily on litigation – their own RAM designs bring in relatively little due to the limited use of RDRAM and XDR – this is a significant blow for the company.

Rambus can of course file an appeal, as they have done in the past when they’ve lost cases, but the consensus is that Rambus is extremely unlikely to win such an appeal. If that’s the case, this could be the beginning of a significant shift in business practices for Rambus: while they have other outstanding cases – most notably against NVIDIA – the anti-trust suit was the largest and most important of them. Not surprisingly, their stock also took a heavy hit as a result, ending the day down 60%. Rambus winning the anti-trust suit had long been factored into the stock price, so the loss significantly reduced the perceived value of the company.

By Ryan Smith

Tuesday, November 22, 2011

Intel Core i7-3960X Extreme Edition

Intel's Sandy Bridge architecture stole the limelight this year, summarily trouncing its predecessors--and the best that AMD had to offer--with considerable performance boosts and power savings. Today Intel is announcing the Sandy Bridge Extreme Edition: a $990 CPU that distills the lessons the company has learned over the past year into a single piece of premium silicon.

It's a time-honored tradition: Take all the improvements from the most recent architecture shift, and drop them into an unlocked processor aimed at overclockers and workstations with considerable computational workloads.

Sitting at the top of the Sandy Bridge Extreme Edition lineup is the 3.3GHz Core i7-3960X. Here are the specs for it and the other two newcomers (the Core i7-3930K and the Core i7-3820), in handy chart form.

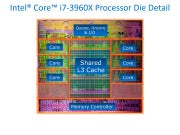

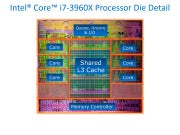

Core i7-3960X up close.

The new processors are built on the Sandy Bridge architecture, and the fundamentals haven't changed. Sandy Bridge Extreme Edition offers two CPUs with six cores, and one CPU with four cores. The Core i7-3960X I examined offers 15MB of L3 cache shared between the cores--up from 12MB in last year's variant, or from 8MB in the Core i7-2600K. That larger L3 cache permits quicker data exchanges among the cores, which improves performance in applications that are optimized for multiple cores.

With new processors come the new X79 chipset and Socket 2011. Yes, a brand-new socket; the sound you hear is a legion of serial upgraders howling with rage. For them, the new socket means having to spring for a new motherboard, but the news isn't entirely bad. When I met with Intel representatives, I was assured that the performance-oriented Socket 2011 will be with us for a few years -- at least until an Extreme Edition of the 22-nanometer Ivy Bridge architecture makes the rounds.

For the frugal enthusiast, picking up a Core i7-3820 CPU is an intriguing option. That CPU is likely to be priced competitively with the existing Core i7-2600K--in the neighborhood of $300. Grab one of them when they're available, save your pennies, and you'll be ready to dive into whatever 22nm-based Extreme Edition goodness Intel comes up with a year from now.

What's New?

Intel parked its test Core i7-3960X CPU on a DX79SI "Siler" motherboard. The Siler is well equipped, to say the least. Eight DIMM slots offer a potential 64GB of RAM, with four DIMMs arranged on each side of the processor. This could pose a problem for some larger CPU fans. For my tests Intel provided an Asetek liquid cooling kit, but plenty of alternative cooling systems and motherboards supporting Socket 2011 will undoubtedly be available at or near launch.

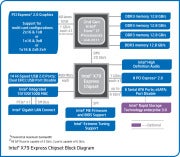

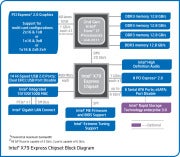

The X79 chipset.

Two USB 3.0 ports occupy the rear of the motherboard, with onboard connectors providing another pair for your case. The Siler supports a total of 14 USB ports--six on the rear, and eight on three onboard headers--with room for two FireWire ports as well. The available SATA channels are fairly standard: two 6.0Gb/s SATA ports, four 3.0Gb/s SATA ports.

For expansion the motherboard offers three PCI Express 3.0 x16 connectors for triple-SLI and Crossfire graphics card configurations, plus a few PCI Express and PCI connectors. The board also provides dual gigabit ethernet jacks, and an IR transmitter/receiver.

Turbo Boost returns, with some improvements. This is how it works: Processors have heat and power thresholds beyond which they become unstable and shut down, or worse. But since processors don't always hit the peak of their thermal design power (TDP), there is some headroom for overclocking.

Turbo Boost (and AMD's variant, dubbed Turbo Core) takes advantage of that performance gap, boosting the performance of the operating cores until the TDP is reached, or until the task at hand is done. In the Core i7-3960X, Turbo Boost means a bump of 300MHz per core when five to six cores are active, and a bump of 600MHz per core when only one or two cores are active.
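As a rough illustration of those Turbo Boost figures, the sketch below maps the number of active cores to the resulting clock speed. The function name `turbo_clock_mhz` is hypothetical, and the bump for three or four active cores isn't given here, so the sketch returns `None` for those cases rather than guessing.

```python
from typing import Optional

BASE_CLOCK_MHZ = 3300  # Core i7-3960X stock clock (3.3GHz), per the review


def turbo_clock_mhz(active_cores: int) -> Optional[int]:
    """Return the boosted clock in MHz for a given number of active cores.

    Only the two cases quoted in the review are covered. The bump for
    three or four active cores is not stated, so None is returned there.
    """
    if not 1 <= active_cores <= 6:
        raise ValueError("the Core i7-3960X has six cores")
    if active_cores <= 2:
        return BASE_CLOCK_MHZ + 600  # one or two active cores
    if active_cores >= 5:
        return BASE_CLOCK_MHZ + 300  # five or six active cores
    return None  # three or four active cores: not given in the review


for cores in (1, 4, 6):
    print(cores, turbo_clock_mhz(cores))
```

With one or two cores active, the result lines up with the 3.9GHz Turbo Boost ceiling mentioned in the gaming tests.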

I paired the Core i7-3960X with the Radeon HD 6990 graphics card, 8GB of RAM, and a 1TB hard drive. To get an idea of where Sandy Bridge E sits in the grand scheme of things, I tested it against the 3.46GHz Core i7-990X, last year's Extreme Edition processor. For mere mortals there's the 3.4GHz Core i7-2600K, the cream of the current Sandy Bridge crop. With the exception of their motherboards our testbeds were identical, and all tests were performed on systems attached to a 30-inch display.

Testing: Gaming

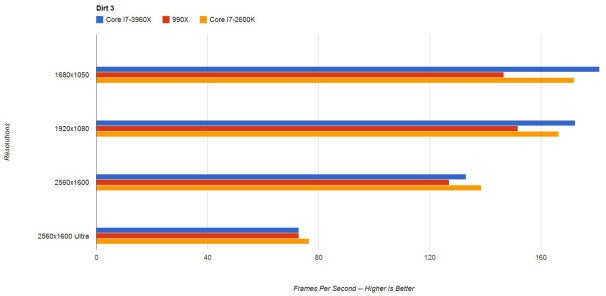

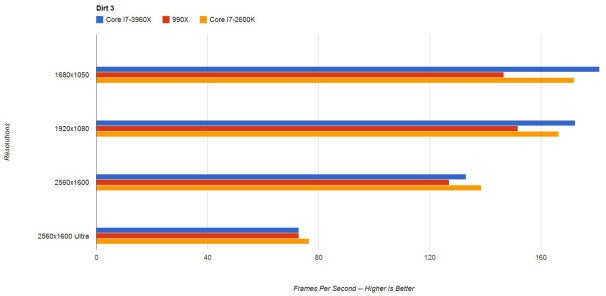

One of the games we used in our testing was Codemasters' Dirt 3, the latest entry in the software maker's rally racing series. Dirt 3 has everything we want in a DirectX 11 game: gorgeous visuals, frenetic pacing, and lots of knobs and levers to fiddle with, to make the most of the hardware we have on hand. Here are our test results for this game.

Dirt 3 test results.

At resolutions of 1680 by 1050 pixels and 1920 by 1080 pixels, the Core i7-3960X finished at the top of the heap, followed by the Core i7-2600K in second place, and the Core i7-990X in third. This is exactly what we expected. Applications that are designed to scale across a plethora of cores will show better results with six-core processors, as will future game engines (as we saw in the Unigine Heaven benchmark). But the most advanced games available today may not take full advantage of the extra headroom yet. And at these resolutions and settings, we're CPU-bound: We've squeezed out just about all the performance we can from the Radeon HD 6990, leaving the games to eke out as much power as they can draw from the processors.

At 2560-by-1600-pixel resolution, the Core i7-2600K actually climbed ahead of the Core i7-3960X by about 5 frames per second, with 138.5 frames per second versus 133.1 fps. At the Ultra setting, the Core i7-2600K netted 76.5 frames per second, against the Core i7-3960X's 72.8.

The difference is negligible. Bear in mind that these tests run at stock speeds; the Core i7-3960X is an unlocked processor that's born and bred for overclocking, and those 4 to 5 frames per second will melt away once the CPU gets pushed beyond its meager 3.3GHz (or 3.9GHz, with Turbo Boost).
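To put that "4 to 5 frames per second" in perspective, here is a minimal sketch that works out the gap from the Dirt 3 numbers quoted above, both in absolute terms and as a percentage of the Core i7-3960X's score. The dictionary layout, its labels, and the `gap` helper are illustrative assumptions; only the frame rates come from the test results.

```python
from typing import Tuple

# Dirt 3 frame rates at 2560 by 1600, as quoted in the review.
# The labels are illustrative; the review names only the Ultra setting.
results = {
    "2560x1600": {"Core i7-2600K": 138.5, "Core i7-3960X": 133.1},
    "2560x1600 Ultra": {"Core i7-2600K": 76.5, "Core i7-3960X": 72.8},
}


def gap(fps_a: float, fps_b: float) -> Tuple[float, float]:
    """Return (gap in fps, gap as a percentage of fps_b)."""
    delta = fps_a - fps_b
    return delta, 100.0 * delta / fps_b


for setting, fps in results.items():
    delta, pct = gap(fps["Core i7-2600K"], fps["Core i7-3960X"])
    print(f"{setting}: 2600K leads by {delta:.1f} fps ({pct:.1f}%)")
```

In both cases the lead works out to a single-digit percentage, which is the sense in which the difference is negligible.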

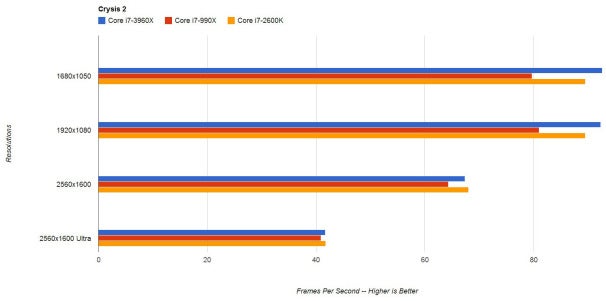

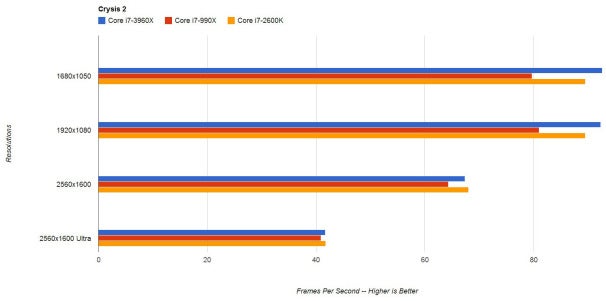

Crytek's Crysis 2 is a decidedly more strenuous game than Dirt 3, but in running it we're still CPU-bound. Here are our Crysis 2 results.

Crysis 2 test results.

At 2560 by 1600 pixels, the Core i7-3960X maintained a frame rate of 67.4 fps, while the Core i7-2600K delivered 68.0 fps. With the settings ratcheted up another notch to Ultra, the Core i7-3960X netted 41.7 fps, to the Core i7-2600K's 41.8 fps.

Testing: Media and Power

When I put Sandy Bridge to the test earlier this year, I was especially impressed by Intel's Quick Sync technology. Quick Sync was designed to speed up video transcoding efforts without requiring a discrete graphics card, and it works well -- but it doesn't show up on Intel's higher-end wares. The company's rationale is that, if you're buying a $300 (or $1000) processor, you probably plan to pair it with a proper graphics card.

I converted a 1080p version of Big Buck Bunny to an iPad-friendly 720p, using Arcsoft's Media Converter 7 software. As Quick Sync wasn't available, I went with AMD Stream hardware acceleration technology, built for AMD's graphics cards. The results: The Core i7-3960X transcoded the video in an average of 2 minutes, 13 seconds, while the Core i7-990X took 3 minutes, 12 seconds, and the Core i7-2600K took 2 minutes, 17 seconds.
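The transcode times are easier to compare once they are converted to seconds. The sketch below does that and expresses each time relative to the fastest run; the `to_seconds` helper and the `times` table are illustrative names, while the timings are the ones reported above.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a minutes-and-seconds timing to total seconds."""
    return 60 * minutes + seconds


# Average transcode times reported in the review.
times = {
    "Core i7-3960X": to_seconds(2, 13),
    "Core i7-990X": to_seconds(3, 12),
    "Core i7-2600K": to_seconds(2, 17),
}

fastest = min(times.values())

# List the CPUs from fastest to slowest, relative to the fastest time.
for cpu, secs in sorted(times.items(), key=lambda item: item[1]):
    print(f"{cpu}: {secs} s, {secs / fastest:.2f}x the fastest time")
```

Seen this way, the Core i7-3960X and Core i7-2600K are only four seconds apart, while last year's Core i7-990X trails by nearly a minute.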

Usually, with great performance comes great power consumption. That said, though the Core i7-3960X sports a 130W TDP, those specs don't represent too much of a leap over the Core i7-2600K and its 95W TDP.

When sitting idle, the Core i7-3960X drew 112 watts of power, the Core i7-990X drew 119 watts, and the Core i7-2600K drew 93.6 watts. That works out to a 20 percent jump over the 2600K for the 3960X--considerable, but hardly beyond the pale. The greater offender against power efficiency here is the Core i7-990X, but again this result just goes to show Sandy Bridge's improved power savings.
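The 20 percent figure can be checked directly from the idle readings. This is a small sketch of that arithmetic; the `percent_more` helper is a hypothetical name, and the wattages are the idle numbers given above.

```python
# Idle power draw in watts, as reported in the review.
idle_watts = {
    "Core i7-3960X": 112.0,
    "Core i7-990X": 119.0,
    "Core i7-2600K": 93.6,
}


def percent_more(value: float, baseline: float) -> float:
    """Return how much larger value is than baseline, in percent."""
    return 100.0 * (value - baseline) / baseline


baseline = idle_watts["Core i7-2600K"]
for cpu in ("Core i7-3960X", "Core i7-990X"):
    jump = percent_more(idle_watts[cpu], baseline)
    print(f"{cpu}: {jump:.1f}% more idle power than the Core i7-2600K")
```

The Core i7-3960X comes out just under 20 percent above the Core i7-2600K, and the Core i7-990X lands noticeably higher.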

Power consumption while under load maintained roughly the same pattern for the three CPUs. Over the course of a strenuous workload, the Core i7-3960X used 5 percent less power than the Core i7-990X, and 24 percent more power than the Core i7-2600K.

The Core i7-3960X is a worthy successor to last year's Extreme Edition processors, but the same caveats apply to it as to them. You'll see the greatest benefit in programs that are heavily threaded--computation-heavy spreadsheets, video encoding applications like Sony Vegas Pro, and 3D rendering applications like Maxon Cinema 4D, for example.

On the gaming front, whether you use Intel's excellent overclocking assistant or muck about in the BIOS, you'll be able to cobble together a nauseatingly potent performance machine. And if a $1000 CPU is within your gaming budget, you can't go wrong. This processor is poised to be the foundation of many of 2012's performance desktop juggernauts, and a few early efforts that have already trickled into the lab are sure to make waves. But if you aren't overclocking--or looking to get some gaming done--you don't need this much power.

Ivy Bridge does throw a wrench into the works. At the moment, we know very little about the platform: It'll be shrunk down to the 22nm process, which should cut power consumption a bit.

Is Sandy Bridge E worth it? Even at $1000, the answer is a resounding yes--if you're using the right apps, are a dedicated overclocker, or have barrels of cash that you simply can't spend fast enough.

By Nate Ralph, PC World

It's a time-honored tradition: Take all the improvements from the most recent architecture shift, and drop them into an unlocked processor aimed at overclockers and workstations with considerable computational workloads.

Sitting at the top of the Sandy Bridge Extreme Edition lineup is the 3.3GHz Core i7-3960X. Here are the specs for it and the other two newcomers (the Core i7-3930K and the Core i7-3820), in handy chart form.

Core i7-3960X up close.The new processors are built on the Sandy Bridge architecture, and the fundamentals haven't changed. Sandy Bridge Extreme Edition offers two CPUs with six cores, and one CPU with four cores. The Core i7-3960X I examined offers 15MB of L3 cache shared between the cores--up from 12MB in last year's variant, or from 8MB in the Core i7-2600K. That larger L3 cache permits quicker data exchanges among the cores, which improves performance in applications that are optimized for multiple cores.

Core i7-3960X up close.The new processors are built on the Sandy Bridge architecture, and the fundamentals haven't changed. Sandy Bridge Extreme Edition offers two CPUs with six cores, and one CPU with four cores. The Core i7-3960X I examined offers 15MB of L3 cache shared between the cores--up from 12MB in last year's variant, or from 8MB in the Core i7-2600K. That larger L3 cache permits quicker data exchanges among the cores, which improves performance in applications that are optimized for multiple cores.With new processors comes the new X79 chipset, and Socket 2011. Yes, a brand-new socket; the sound you hear is a legion of serial upgraders howling with rage. For them, the new socket means having to spring for new motherboard, but the news it isn't entirely bad. When I met with Intel representatives, I was assured that the performance-oriented Socket 2011 will be with us for a few years -- at least until an Extreme Edition of the 22-nanometer Ivy Bridge architecture makes the rounds.

For the frugal enthusiast, picking up a Core i7-3820 CPU is an intriguing option. That CPU is likely to be priced competitively with the existing Core i7-2600K--in the neighborhood of $300. Grab one of them when they're available, save your pennies, and you'll be ready to dive into whatever 22nm-based Extreme Edition goodness Intel comes up with a year from now.

What's New?

Intel parked its test Core i7-3960X CPU on a DX79SI "Siler" motherboard. The Siler is well equipped, to say the least. Eight DIMM slots offer a potential 64GB of RAM, with four DIMMs arranged on each side of the processor. This could pose a problem for some larger CPU fans. For my tests Intel provided an Asetek liquid cooling kit, but plenty of alternative cooling systems and motherboards supporting Socket 2011 will undoubtedly be available at or near launch.

The X79 chipset.Two USB 3.0 ports occupy the rear of the motherboard, with onboard connectors providing another pair for your case. The Siler supports a total of 14 USB ports--six on the rear, and eight on three onboard headers--with room for two FireWire ports as well. The available SATA channels are fairly standard: two 6.0GB/s SATA ports, four 3.0GB/s SATA ports.

The X79 chipset.Two USB 3.0 ports occupy the rear of the motherboard, with onboard connectors providing another pair for your case. The Siler supports a total of 14 USB ports--six on the rear, and eight on three onboard headers--with room for two FireWire ports as well. The available SATA channels are fairly standard: two 6.0GB/s SATA ports, four 3.0GB/s SATA ports.For expansion the motherboard offers three PCI Express 3.0 x16 connectors for triple-SLI and Crossfire graphics card configurations, plus a few PCI Express and PCI connectors. The board also provides dual gigabit ethernet jacks, and an IR transmitter/receiver.

Turbo Boost returns, with some improvements. This is how it works: Processors have heat and power thresholds beyond which they become unstable and shut down, or worse. But since processors don't always hit the peak of their thermal design power (TDP), there is some headroom for overclocking.

Turbo Boost (and AMD's variant, dubbed Turbo Core) take advantage of that performance gap, boosting the performance of the operating cores until the TDP is reached, or until the task at hand is done. In the Core i7-3960X, Turbo Boost means a bump of 300MHz per core when five to six cores are active, and a bump of 600MHz per core when only one or two cores are active.

I paired the Core i7-3960X with the Radeon HD 6990 graphics card, 8GB of RAM, and a 1TB hard drive. To get an idea of where SandyBridge E sits in the grand scheme of things, I tested it against the 3.46GHz Core i7-990X, last year's Extreme Edition processor. For mere mortals there's the 3.4GHz Core i7-2600K, the cream of the current Sandy Bridge crop. With the exception of their motherboards our testbeds were identical, and all tests were performed on systems attached to a 30-inch display.

Testing: Gaming

Of the games we used in our testing was Codemasters' Dirt 3, the latest entry in the software maker's rally racing series. Dirt 3 has everything we want in a DirectX 11 game: gorgeous visuals, frenetic pacing, and lots of knobs and levers to fiddle with, to make the most of the hardware we have on hand. here are our test results for this game.

Dirt 3 test results.

Dirt 3 test results.At resolutions of 1680 by 1050 pixels and 1920 by 1080 pixels, the Core i7-3960X finished at the top of the heap, followed by the Core i7-2600K in second place, and the Core i7-990X in third. This is exactly what we expected. Applications that are designed to scale across a plethora of cores will show better results with six-core processors, as will future game engines (as we saw in the Unigine Heaven benchmark). But the most advanced games available today may not take full advantage of the extra headroom yet. And at these resolutions and settings, we're CPU-bound: We've squeezed out just about all the performance we can from the Radeon HD 6990, leaving the games to eke out as much power as they can draw from the processors.

At 2560-by-1600-pixel resolution, the Core i7-2600K actually climbed ahead of the Core i7-3690X by 5 frames per second, with 138.5 frames per second versus 133.1 fps. At the Ultra setting, the Core i7-2600K netted 76.5 frames per second, against the Core i7-3690X's 72.8.

The difference is negligible. Bear in mind that these tests run at stock speeds; the Core i7-3960X is an unlocked processor that's born and bred for overclocking, and those 4 to 5 frames per second will melt away once the CPU gets pushed beyond its meager 3.3GHz (or 3.9GHz, with Turbo Boost).

Crytek's Crysis 2 is a decidedly more strenuous game than Dirt 3, but in running it we're still CPU-bound. Here are our Crysis 2 results.

Crysis 2 test results.

At 2560 by 1600 pixels, the Core i7-3960X maintained a frame rate of 67.4 fps, while the Core i7-2600K delivered 68.0 fps. With the settings ratcheted up another notch to Ultra, the Core i7-3960X netted 41.7 fps, to the Core i7-2600K's 41.8 fps.

Testing: Media and Power

When I put Sandy Bridge to the test earlier this year, I was especially impressed by Intel's Quick Sync technology. Quick Sync was designed to speed up video transcoding efforts without requiring a discrete graphics card, and it works well -- but it doesn't show up on Intel's higher-end wares. The company's rationale is that, if you're buying a $300 (or $1000) processor, you probably plan to pair it with a proper graphics card.

I converted a 1080p version of Big Buck Bunny to an iPad-friendly 720p, using ArcSoft's Media Converter 7 software. As Quick Sync wasn't available, I went with AMD Stream hardware acceleration technology, built for AMD's graphics cards. The results: The Core i7-3960X transcoded the video in an average of 2 minutes, 13 seconds, while the Core i7-990X took 3 minutes, 12 seconds, and the Core i7-2600K took 2 minutes, 17 seconds.

Usually, with great performance comes great power consumption. That said, though the Core i7-3960X sports a 130W TDP, those specs don't represent too much of a leap over the Core i7-2600K and its 95W TDP.

When sitting idle, the Core i7-3960X drew 112 watts of power, the Core i7-990X drew 119 watts, and the Core i7-2600K drew 93.6 watts. That works out to a 20 percent jump over the 2600K for the 3960X--considerable, but hardly beyond the pale. The greater offender against power efficiency here is the Core i7-990X, but again this result just goes to show Sandy Bridge's improved power savings.
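The "20 percent jump" can be checked directly from the idle readings quoted above. This is only a quick arithmetic sketch; `percent_more` is a made-up helper name.

```python
# Idle power draw in watts, as quoted in the review.
idle_watts = {
    "Core i7-3960X": 112.0,
    "Core i7-990X": 119.0,
    "Core i7-2600K": 93.6,
}


def percent_more(value, baseline):
    """How much larger value is than baseline, as a percentage of baseline."""
    return 100.0 * (value - baseline) / baseline


baseline = idle_watts["Core i7-2600K"]
for cpu, watts in idle_watts.items():
    print(f"{cpu}: {watts} W idle, {percent_more(watts, baseline):+.1f}% vs. the 2600K")
```

The 3960X comes out a little under 20 percent above the 2600K, and the 990X about 27 percent above it, which matches the ordering described in the text.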

Power consumption while under load maintained roughly the same pattern for the three CPUs. Over the course of a strenuous workload, the Core i7-3960X used 5 percent less power than the Core i7-990X, and 24 percent more power than the Core i7-2600K.

Serious Inquiries Only

The Core i7-3960X is a worthy successor to last year's Extreme Edition processors, but the same caveats apply to it as to them. You'll see the greatest benefit in programs that are heavily threaded--computation-heavy spreadsheets, video encoding applications like Sony Vegas Pro, and 3D rendering applications like Maxon Cinema 4D, for example.

On the gaming front, whether you use Intel's excellent overclocking assistant or muck about in the BIOS, you'll be able to cobble together a nauseatingly potent performance machine. And if a $1000 CPU is within your gaming budget, you can't go wrong. This processor is poised to be the foundation of many of 2012's performance desktop juggernauts, and a few early efforts that have already trickled into the lab are sure to make waves. But if you aren't overclocking--or looking to get some gaming done--you don't need this much power.

Ivy Bridge does throw a wrench into the works. At the moment, we know very little about the platform: It'll be shrunk down to a 22nm process, which should cut power consumption a bit.

Is Sandy Bridge E worth it? Even at $1000, the answer is a resounding yes--if you're using the right apps, are a dedicated overclocker, or have barrels of cash that you simply can't spend fast enough.

By Nate Ralph, PC World

Monday, November 21, 2011

Logitech G9X Mouse for Gaming

Now twitching in your hands, it’s about time we told you about its game playing assistive abilities, and they do not disappoint.

The Dual Mode scroll wheel allows precise selection when required yet can spin like the moon if you ask it. A simple light click down alternates between the 2 modes. Hyper Scroll – Click to Click.

For this part of the review we obviously require a game, so one of my favourites, Call of Duty: Modern Warfare 3, is loading, and I pile straight into my favourite map, Overgrown. I notice straight away how well the mouse glides on the gaming mat. The smooth slipper feet made from Teflon are no doubt the reason, and I can tell you this: it is super silky smooth. It even works fairly well on the bare desk and on my slacks, so no worries there.

The mouse is as accurate as you’d expect from a G-Series rodent, with the 5700dpi sensor seemingly underwhelmed by my playing ability. At a little under 4″ x 3″ in size it is a full-size mouse. Yet another plus point is the Logitech G9x’s ergonomic design: even after an hour of play I can hardly feel any strain on my wrist or fingers.

Logitech also quotes a polling rate of 1000Hz, which is pretty darn quick, so there are absolutely no problems with communications lag. The potential tracking speed of 165 inches per second is not only beyond my arms, but well beyond the reach of this, and most likely any, desk.
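To get a feel for what those figures mean together, here is a rough back-of-the-envelope sketch. It assumes the tracking figure is in inches per second and that "dpi" means sensor counts per inch of travel; the variable names are purely illustrative.

```python
POLLING_RATE_HZ = 1000   # quoted polling rate (reports per second)
SENSOR_DPI = 5700        # quoted sensor resolution, read as counts per inch
MAX_SPEED_IPS = 165      # quoted tracking speed, assumed to be inches per second

# Time between two position reports sent to the PC.
report_interval_ms = 1000.0 / POLLING_RATE_HZ

# Movement counts generated per second at the maximum tracked speed.
counts_per_second = SENSOR_DPI * MAX_SPEED_IPS

# Movement counts carried by each report at that speed.
counts_per_report = counts_per_second / POLLING_RATE_HZ

print(f"Report interval: {report_interval_ms:.1f} ms")
print(f"Counts per second at top speed: {counts_per_second}")
print(f"Counts per report at top speed: {counts_per_report:.1f}")
```

In other words, the mouse reports its position once every millisecond, which is why lag is not something you notice in play.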

The G9x is an Optical Laser Gaming Mouse, which means it uses a laser and sensor that read the surface the mouse is gliding across. With a superior 5700dpi engine the accuracy is stunning, and a maximum acceleration of 30g is enough to make sure the pointer sits exactly where you ask it to. It’s Windows XP, Vista and 7 ready, and plug and play if you feel like doing without the SetPoint software, which I would personally recommend.

In conclusion, the Logitech G9x Gaming Mouse is without doubt an excellent piece of kit, capable not only of impressing your friends but also of making your gaming experiences that bit more enjoyable.

Tuesday, November 15, 2011

Best 24 Inch Monitor ASUS ML248H 1920x1080 LED Monitor

One of our favorite monitors of the year is the ML248H. This inexpensive and ultra-thin LED gaming monitor has a 2ms response time, offers full 1080p at 1920x1080 resolution, and includes an HDMI input for easy connectivity.

The ML248H also comes with a ring stand that allows the monitor to easily tilt and swivel in any direction that you see fit. While this monitor has been on the bestselling list for the first part of the year, recently the Viewsonic VX2453MH-LED, the 24-inch version of the monitor reviewed above, has taken its place.

Product Details: 2ms response time, full HD 1920x1080 resolution. Ring stand that allows full tilt and swivel. Save money with the low power consumption of LED backlighting (less than 30 watts). Includes a 3-year parts and labor warranty.
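For a sense of what 1920x1080 means on a panel this size, here is a small sketch that derives the pixel count, aspect ratio and approximate pixel density. It assumes a 24-inch diagonal, taken from the headline; the actual viewable diagonal may differ slightly, so treat the density as a ballpark.

```python
import math

WIDTH_PX, HEIGHT_PX = 1920, 1080
DIAGONAL_IN = 24.0  # assumption: nominal size from the headline

# Total number of pixels on the panel.
total_pixels = WIDTH_PX * HEIGHT_PX

# Reduce width:height to its simplest ratio.
divisor = math.gcd(WIDTH_PX, HEIGHT_PX)
aspect_ratio = (WIDTH_PX // divisor, HEIGHT_PX // divisor)

# Pixels per inch along the diagonal.
pixels_per_inch = math.hypot(WIDTH_PX, HEIGHT_PX) / DIAGONAL_IN

print(f"Pixels: {total_pixels:,}")
print(f"Aspect ratio: {aspect_ratio[0]}:{aspect_ratio[1]}")
print(f"Approx. density: {pixels_per_inch:.0f} ppi")
```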

Sunday, November 13, 2011

Logitech Z906 Surround-Sound Computer speakers

Best surround-sound computer speakers

Pros

- Sturdy speakers

- Easy to set up and use

- Good surround sound for games and movies

- Multichannel analog inputs

Cons

- Not as great for playing music in one test

- No HDMI connectivity

- No HD audio decoding

- Home theater speakers sound better

Reviews say surround-sound computer speakers can't match the sound quality of a dedicated home theater system, but the Logitech Z906 does a good job bringing a home-theater-like experience to your desktop. This 5.1 system (five speakers plus subwoofer) works especially well for movies and games, with bass that's punchy but that doesn't drown out everything else. TrustedReviews.com says the Z906 delivers rich-sounding music, too, although MaximumPC magazine's tester detects harsh, brittle highs with pianos and vocals. Several experts and owners are disappointed that Logitech didn't include any HDMI ports or the ability to decode Dolby TrueHD and DTS-HD Master Audio, both of which would have made it better to use with Blu-ray players and game consoles. It does, however, have multichannel analog inputs for those devices, including some Blu-ray players, that have those ports. If you're not dead-set on surround sound, reviews recommend several PC speaker sets with fewer speakers but better sound quality.

Saturday, November 12, 2011

ATI Crossfire Explained

ATI CrossFire technology works similarly to NVIDIA's SLI. Just like SLI, CrossFire uses multiple Graphics Processing Units (GPUs) to improve 3D graphics performance. In this article we take a look at the different generations of CrossFire and the different modes of this great technology.

First CrossFire Cards

When ATI CrossFire first came out, it worked like this: you needed a CrossFire-compatible motherboard, a 'master' CrossFire Edition video card, and another regular 'slave' video card. The master video card contained a compositing engine that combined the two GPU outputs and sent the result to the screen.

The master and slave video cards did not have to be the same model, but they had to be from the same GPU family. The two video cards were connected via a Y-dongle that plugged into each card's DVI port.

Native CrossFire

The next generation of CrossFire did not require a master card. You just needed two PCI-Express video cards with CrossFire capability. The dongle connection from the first generation was removed, and a bridge was used to connect the two video cards instead (similar to NVIDIA SLI).

CrossFireX

Part of the AMD Spider platform is the recently released CrossFireX, which allows you to connect up to four video cards for fast gaming performance.

Different CrossFire Modes

ATI CrossFire can be set to function in different modes.

- Alternate Frame Rendering - Just like SLI's Alternate Frame Rendering mode, the GPUs take turns rendering whole frames. For example, the first GPU will render all the even frames while the second GPU will render all the odd frames.

- Supertiling Mode - Supertiling works by dividing the screen/frame into small squares of pixels and spreading them evenly to each GPU for processing.

- Scissor Mode - In scissor mode the frame is split into two pieces, with the split chosen according to rendering effort. Each GPU is given one piece of the frame to process.

- Antialiasing - Same as the SLI antialiasing mode, this increases the quality of the image instead of the rendering speed. You can enable up to 14x of antialiasing.

- by buid-gaming-computers.com
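The three work-splitting modes listed above can be sketched in a few lines. This is a toy model, not how the driver is actually implemented: the 32-pixel tile size, the checkerboard pattern, and the per-row cost list are all assumptions made for illustration, and every function name here is hypothetical.

```python
def alternate_frame_rendering(frame_index, num_gpus=2):
    """AFR: whole frames are handed out in turn (even -> GPU 0, odd -> GPU 1)."""
    return frame_index % num_gpus


def supertile_owner(x, y, tile_size=32, num_gpus=2):
    """Supertiling: cut the frame into square tiles and deal them out evenly.

    A checkerboard pattern is assumed here; tile_size is an illustrative value.
    """
    tile_col, tile_row = x // tile_size, y // tile_size
    return (tile_col + tile_row) % num_gpus


def balanced_split(row_costs):
    """Scissor mode: pick the row that divides the estimated work in two.

    row_costs holds a hypothetical rendering-cost estimate for each row of pixels.
    Returns the first row that belongs to the second GPU.
    """
    half = sum(row_costs) / 2.0
    running = 0.0
    for row, cost in enumerate(row_costs):
        running += cost
        if running >= half:
            return row + 1
    return len(row_costs)


def scissor_owner(y, split_row):
    """Scissor mode: rows above the split go to GPU 0, the rest to GPU 1."""
    return 0 if y < split_row else 1


# Frames 0..3 alternate between the two GPUs.
print([alternate_frame_rendering(i) for i in range(4)])

# A frame whose top rows are three times as costly is split higher up.
costs = [3.0] * 100 + [1.0] * 300
split = balanced_split(costs)
print(split, scissor_owner(0, split), scissor_owner(399, split))
```

The point of the scissor example is that the split does not have to sit in the middle of the screen: if the top of the frame is more expensive to draw, the first GPU gets fewer rows.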

Technology Behind NVIDIA SLI

What is NVIDIA SLI Technology?

NVIDIA® SLI™ technology is a revolutionary platform innovation that allows you to intelligently scale graphics performance by combining multiple NVIDIA graphics solutions in an SLI-Certified motherboard.

Performance Chart:

How Does SLI Technology Work?

Using proprietary software algorithms and dedicated scalability logic in each NVIDIA graphics processing unit (GPU), NVIDIA SLI technology delivers up to twice the performance (with 2 cards) and 2.8X the performance (with 3 cards) compared to a single graphics solution.
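One way to read those "up to" figures is as scaling efficiency, meaning how much of each extra card's potential actually shows up. The sketch below only does that division on the quoted best-case numbers; `scaling_efficiency` is an illustrative helper, and real games will vary.

```python
# Best-case speedups quoted above, keyed by number of cards.
claimed_speedup = {2: 2.0, 3: 2.8}


def scaling_efficiency(cards, speedup):
    """Fraction of perfect scaling achieved (1.0 means every card adds a full card's worth)."""
    return speedup / cards


for cards, speedup in claimed_speedup.items():
    eff = scaling_efficiency(cards, speedup)
    print(f"{cards} cards: {speedup:.1f}x, {eff:.0%} of perfect scaling")
```

So even on the best-case figures, the third card contributes less than the second one did.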

How Do I Get SLI?

To run SLI, a PC needs to be equipped with an SLI-Certified motherboard and 2 or 3 SLI-Certified GeForce GPUs. We also recommend using an SLI-Certified power supply and chassis for maximum stability. NVIDIA publishes a list of certified products.

Why is an SLI-Ready PC an Intelligent Investment?

No other PC component upgrade can offer even close to the gaming performance boost gained from adding a second graphics card.

Friday, November 11, 2011

Review of My CPU: Intel Core i7-980X Extreme Edition

Intel’s PC-processor competitor, AMD, has done well with its budget and mainstream CPUs, with products like the Athlon II X4 635 eking out a performance edge over Intel’s comparably priced Core i3-530. But at the high end of the CPU world, where absolute performance matters much more than consumer-friendly pricing, Intel is still the undisputed king. Its current Core i7 CPUs top anything AMD currently has on offer.

That continues with the latest Core i7 chip, the Core i7-980X Extreme Edition (code-named "Gulftown" during its development), which packs six cores and can handle up to 12 simultaneous processing threads. Intel has taken the next logical step in chip architecture by increasing the number of cores, and in doing so, the company has delivered another "world’s fastest CPU." Indeed, in programs that are fully threaded (that is, written to take full advantage of as many cores and threads as are available), the Core i7-980X is substantially faster than the previous CPU champ, the Core i7-975 Extreme Edition. But given the still-limited selection of software that is fully optimized to take advantage of multiple cores (most of them professional content-creation apps, media transcoders, and a few games), not to mention the stratospheric $1,000 price, this CPU is all kinds of overkill for the average user.

Still, if you are a professional content creator who edits loads of HD video, large image files, or 3D models, upgrading to the Core i7-980X may be a smart choice, even if you already have a Core i7-975. (Note: If you're dropping this CPU into an existing Socket 1366 motherboard, you'll have to update your BIOS before installing the CPU; otherwise, the system may not boot.) In our video-editing and -encoding tests, the Core i7-980X was 25 percent to 40 percent faster than the Core i7-975, depending on the program. That kind of performance boost could shave hours off rendering times when working with large files. But with software that isn’t multi-core-aware, like iTunes, the Core i7-980X’s performance is dead-even with its predecessor's, due to the fact that the two CPUs share the same 3.33GHz clock speed.

The similarities between the Core i7-980X and the Core i7-975 don’t end with clock speed. Both chips use the same CPU socket (LGA 1366, reserved for Intel's high-end Core i7 chips) and motherboard chipset (the Intel X58). And both CPUs are equipped with the same base features. Hyper-Threading allows fully threaded software to simultaneously perform two processing threads per core, for a grand total of 12 threads on the Core i7-980X, versus "just" eight threads with the Core i7-975. (The latter has four physical cores, to the Core i7-980X’s six.) Additionally, both CPUs feature Intel’s Turbo Boost technology, which helps boost performance in software that isn’t written for multiple cores by switching off unused cores and, within the chip's thermal parameters, automatically cranking up the CPU clock speed.

Considering the similarities and differences between these two CPUs, the Core i7-980X performed about as expected in testing. It pulled well ahead of the Core i7-975 in programs that take full advantage of extra cores, but it stayed neck-and-neck with the Core i7-975 elsewhere, since both CPUs share the same base clock speed. In all tests, AMD’s current flagship chip, the Phenom II X4 965, lagged far behind. (It should be noted, though, that at around $190 at the time we wrote this, that AMD Phenom CPU costs less than a fifth of the price of either of these Intel chips.)
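Why two extra cores help in some programs and not in others can be illustrated with Amdahl's law, a standard textbook model that is not part of the testing described here. The sketch assumes both chips run at the same clock and ignores Hyper-Threading, Turbo Boost and memory effects; `parallel_fraction` is the share of a program's work that can be spread across cores.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Speedup over a single core when only part of the work can run in parallel."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def six_vs_four(parallel_fraction):
    """How much faster six cores are than four at the same clock speed."""
    return amdahl_speedup(parallel_fraction, 6) / amdahl_speedup(parallel_fraction, 4)


for p in (0.0, 0.5, 0.9, 1.0):
    print(f"{p:.0%} parallel: six cores are {six_vs_four(p):.2f}x as fast as four")
```

With no parallel work the two chips tie, and only a perfectly threaded program reaches the full 1.5x that a 50 percent increase in cores could deliver.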

We started our workout with our Sony Vegas 8 test, which is designed specifically to tax all CPU cores. The Core i7-980X finished our standard MPEG-2 rendering trial in Vegas in just 1 minute and 40 seconds, while the Core i7-975 took 2:13 to tackle that task and AMD’s Phenom II X4 965 took 3:12. We then moved on to Cinebench 10, another test that taxes multiple cores. The Core i7-980X scored a stunning 26,981, while the Core i7-975 came in at 19,973, and AMD's Phenom II X4 965 managed just 10,454. That's sheer processing muscle if you're using the right apps.

The results for the Core i7-980X were even more impressive in our next test, our Windows Media Encoder video-conversion trial, which also takes advantage of multiple cores. It took just 1 minute and 40 seconds to convert our standard test file, while the AMD chip took 2:53, and Intel's Core i7-975 took 3:01. Clearly, if editing or transcoding large files is something you do often, the Core i7-980X will save you some serious time.

On the other hand, our iTunes 7 conversion test didn’t show such disparate results. This reveals the real-world limitations of these high-end processors when tackling everyday computing tasks using software that isn't multi-core-capable. The Core i7-980X converted our 11 test tracks in 2 minutes and 26 seconds; the Core i7-975 actually did so one second faster (well within the margin of error); and AMD’s Phenom II X4 965 came in 21 seconds behind, at 2:47.
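The timings and scores quoted above are easier to compare as ratios against the Core i7-975. This is a small sketch using only the figures from the text; the helper names are illustrative, and the iTunes time for the 975 is taken as 2:25 from "one second faster".

```python
def seconds(mmss):
    """Convert a 'minutes:seconds' string into seconds."""
    minutes, secs = mmss.split(":")
    return int(minutes) * 60 + int(secs)


# Completion times as quoted in the review (lower is better).
timed_tests = {
    "Sony Vegas 8": {"980X": "1:40", "975": "2:13", "Phenom II X4 965": "3:12"},
    "Windows Media Encoder": {"980X": "1:40", "975": "3:01", "Phenom II X4 965": "2:53"},
    "iTunes 7": {"980X": "2:26", "975": "2:25", "Phenom II X4 965": "2:47"},
}

# Cinebench 10 scores as quoted (higher is better).
cinebench = {"980X": 26981, "975": 19973, "Phenom II X4 965": 10454}


def time_speedup(test):
    """How many times faster the 980X finished than the 975."""
    times = timed_tests[test]
    return seconds(times["975"]) / seconds(times["980X"])


for test in timed_tests:
    print(f"{test}: 980X is {time_speedup(test):.2f}x the speed of the 975")

print(f"Cinebench 10: 980X scores {cinebench['980X'] / cinebench['975']:.2f}x the 975")
```

A ratio close to 1.00, as in the iTunes test, means the extra cores made no practical difference.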

In every test other than iTunes, in which it essentially tied its identically clocked quad-core predecessor, the Core i7-980X set new records. And thanks to a 50 percent increase in processing cores, it pulled well ahead in those tests that take full advantage of all available processing power. This CPU is extremely fast, no doubt, but unless you’re a content-creation professional who taps toes and twiddles thumbs every day while waiting for projects to render, there’s no reason that anyone who owns an earlier Core i7 chip should feel strongly compelled to upgrade at current prices.

Gamers will also see some benefit in titles that take advantage of multiple cores. (Where applicable, the extra processing threads often allow the game to handle more-complex artificial intelligence for non-player characters.) But again, while a handful of games can make use of these extra cores, and more are on the way, most games currently on the market won't see a significant benefit here if you're already running a fast quad-core CPU. Gamers looking for bragging rights will certainly get them by adding this CPU to their systems, but adding a second (or third) graphics card, if the PC can handle it, will likely result in a more noticeable benefit across more games.

It’s also worth noting that, like the previous Core i7 Extreme Edition CPU (and AMD’s Black Edition CPUs), the Core i7-980X features an unlocked multiplier for easy overclocking. To get a sense of the CPU's overclocking potential, check out our review of Falcon Northwest's latest Mach V desktop, outfitted with an overclocked Core i7-980X. With the help of liquid cooling, Falcon pushed the CPU to a bit above 4GHz, and in our tests, the PC was quite stable.

In sum: The 980X is worth the asking price for creative professionals dealing in large files; for everyone else, it’s overkill, at least from a practical perspective. Still, there’s no denying the geek cred that comes with having a CPU that can handle 12 processes at once, just because.

By Matt Safford

A detailed, labeled schematic of the Core i7-980X die, with its six cores. The massive 12MB of L3 cache (which is shared across all cores) takes up much of the space.

Thursday, November 10, 2011

Intel Haswell Details Emerge: 2-4 Cores, New Graphics Core, DDR3, Low Power

The very first details about the actual microprocessors based on code-named Haswell micro-architecture for mainstream desktops and notebooks have emerged on the Internet. Instead of increasing the number of cores inside its microprocessors, Intel Corp. will continue to improve efficiency to boost performance amid aggressive lowering of power consumption of chips.

Intel Haswell microprocessors for mainstream desktops and laptops will be structurally similar to existing Core i-series "Sandy Bridge" and "Ivy Bridge" chips. According to a slide (which resembles those from Intel) published by the ChipHell web-site, they will continue to have two or four cores with Hyper-Threading technology, along with a graphics adapter that shares the last level cache (LLC) with the processing cores and works with the memory controller via the system agent. On the micro-architectural level the chip will be a lot different: its x86 cores will be based on the brand new Haswell micro-architecture, and its graphics engine, based on the Denlow architecture, will support new features such as DirectX 11.1 and OpenGL 3.2+.

The processors that belong to the Haswell generation will continue to rely on a dual-channel DDR3/DDR3L memory controller with DDR power gating support to trim idle power consumption. The chip will have three PCI Express 3.0 controllers, Intel Turbo Boost technology with further improvements, power-aware interrupt routing for power/performance optimizations and other improvements. What is important is that Haswell-generation chips will sport new form-factors, including LGA 1150 for desktops as well as rPGA and BGA for laptops.

The new processors for mobile applications will continue to have thermal design power between 15W and 57W (15W, 37W, 47W and 57W) for ultra low-voltage and extreme edition models, respectively, while desktop chips will have TDP in the range between 35W and 95W, just like today. However, in a bid to open the doors to various new form-factors, such as ultrabooks, Intel implemented a number of aggressive measures to trim power consumption even below the levels of Ivy Bridge, including power-aware interrupt routing for power/performance optimizations, configurable TDP and LPM, DDR power gating, power optimizer (CPPM) support, idle power improvements, latest power states, etc.

The most important improvements of Haswell are on the level of the x86 core micro-architecture. It is believed that the new micro-architecture will be substantially different from the current Nehalem/Sandy Bridge generations, which will enable further scalability and performance increases. In addition, Haswell will support numerous new instructions, including AVX2, bit manipulation instructions, FPMA (floating point multiply accumulate) and others. The Denlow graphics core of Haswell will also sport substantially boosted performance and will be certified to run many professional applications.

By Anton Shilov

On Board Sound vs Dedicated Card

Onboard Sound Vs. Add in Sound Card

This is one of the most prevalent questions asked in this forum. I figured I would put all the info in this thread so members can find it quickly and easily. Every time the question is asked, it fuels a debate that always ends with the same conclusion.

Why should I get a soundcard when my Motherboard has onboard sound?

I would say onboard sound is basically a marketing tactic used by motherboard manufacturers to sell an all-in-one solution. These sound chips have undoubtedly come a long way since the first onboards, and would be fine for a basic office or home PC, but they still do not stand up to a quality add-in PCI card for many reasons.

Onboard sound chips need to use CPU cycles to process sound. This robs your system of performance. If your sound chip has EAX, which a lot of them do these days, the issue is compounded, usually degrading your performance by somewhere in the area of 5-15 FPS in games. This is the FPS gain users usually report when they install a PCI sound solution. This is not the worst issue though.

But my onboard sounds fine to me?

Of course, if a user has been using their sound chip for quite a while, the ear gets used to the less than stellar sound quality of onboard sound chips. When a user installs a PCI solution they are literally blown away by the sound quality. They report hearing things in games and music that previously were not noticeable. Once a user is used to a PCI sound card they can hear the deficiencies inherent to onboard sound chips and will never use one again. Onboard chips sound very cold and sterile. DSP effects are usually overwhelming and washed out. These chips have none of the qualities usually associated with good sound, so a lot of the time the user will not know what they are missing until they hear it. You will need a set of decent speakers to be able to hear the difference, however. $10 speakers won't cut it.

Now, over the years various companies have released onboard sound chips that have tried to get over these issues, for example by connecting to the bus in different ways so they don't use CPU cycles. In terms of sound quality they are still lacking. All in all, that's what we all want; I couldn't care less about other things as long as I have great sound quality. People spend so much time building up their video subsystems, studying and reading about the best video components, but then they have an older onboard sound chip connected to small multimedia speakers. Very sad indeed. If they invested half the time researching audio that they spent on video, they wouldn't be using onboard sound. They would be using at least an entry level sound card with surround sound speakers. Modern games are production masterpieces with equal attention paid to audio and video. Without this hardware you're only getting half of what the game developers wanted you to experience. Sound is definitely an important part of all modern games. Indeed, most new games contain EAX, surround sound support and directional audio, all used to make your game more enjoyable. Unfortunately, all are limited by the sound hardware you have.

By ROBSCIX

Wednesday, November 9, 2011

When Can We Expect DDR4 Arrival?

Can you believe DDR3 has been present in home PC systems for four years already? It still has another year as king of the hill before DDR4 is introduced, and the industry currently isn’t expecting volume shipments of DDR4 until 2015.

JEDEC isn’t due to confirm the DDR4 standard until next year, but following on from the recent MemCon Tokyo 2010, Japanese website PC Watch has combined the roadmaps of several memory companies on what they expect DDR4 to offer.

Frequencies abound! Voltage drops! But power still increases?

It looks like we should expect DDR4 to be introduced at 2,133MHz and to scale to over 4.2GHz. 1,600MHz (10ns) could well remain the base spec for server DIMMs that require reliability, but it’s expected that JEDEC will create new standard DDR3 frequency specifications all the way up to 2,133MHz, which is where DDR4 should jump off.

As the prefetch per clock should extend to 16 bits (up from 8 bits in DDR3), this means the internal cell frequency only has to scale the same as DDR2 and DDR3 in order to achieve the 4+GHz target.
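To make the prefetch argument concrete, here is a rough Python sketch. It assumes the simple rule of thumb that the internal cell clock is the interface data rate divided by the prefetch depth; the function name and the "DDR4-4200" label are made up for illustration, and the figures are the roadmap numbers quoted above, not final specifications.

```python
def core_clock_mhz(data_rate, prefetch_depth):
    """Approximate internal cell clock (MHz) for a given interface data rate.

    Assumption: cell clock = data rate / prefetch depth, where the data rate
    is the headline "MHz" figure quoted in the article.
    """
    return data_rate / prefetch_depth


# (label, data rate, prefetch depth) using the figures quoted in the article
generations = [
    ("DDR3-2133", 2133, 8),   # top of the expected DDR3 range
    ("DDR4-4200", 4200, 16),  # hypothetical label for the 4.2GHz target
]

for label, rate, prefetch in generations:
    print(f"{label}: internal cell clock ~{core_clock_mhz(rate, prefetch):.1f} MHz")
```

Under that assumption both parts land in the same 260-270MHz neighbourhood internally, which is why doubling the prefetch lets the interface speed double without the memory cells themselves having to run any faster.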

The downside of frequency scaling is that voltage isn’t dropping fast enough and the power consumption is increasing relative to PC-133. DDR4 at 4.2GHz and 1.2V actually uses 4x the power of SDRAM at 133MHz at 3.3V. 1.1V and 1.05V are currently being discussed, which brings the power down to just over 3x, but it depends on the quality of future manufacturing nodes – an unknown factor.

While 4.2GHz at 1.2V might require 4x the power, it’s also a 2.75x drop in voltage for a 32-fold increase in frequency. That seems like a very worthy trade-off to us: put it against the evolution of power use in graphics cards for comparison and it looks very favourable.
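The 4x and "just over 3x" figures can be roughly reproduced with a back-of-the-envelope Python sketch. It assumes the textbook dynamic power relation (power proportional to voltage squared times frequency) with everything else held constant, which is a simplification and not necessarily how the memory vendors arrived at their numbers. The function name is made up for illustration.

```python
def relative_power(voltage, freq_mhz, ref_voltage=3.3, ref_freq_mhz=133.0):
    """Dynamic power relative to PC-133 SDRAM at 3.3V.

    Assumption: P is proportional to V^2 * f, with capacitance and
    activity treated as unchanged between generations.
    """
    return (voltage / ref_voltage) ** 2 * (freq_mhz / ref_freq_mhz)


print(f"Voltage drop:   {3.3 / 1.2:.2f}x")     # 2.75x
print(f"Frequency gain: {4200 / 133.0:.1f}x")  # about 31.6x, i.e. roughly 32-fold

# The three DDR4 voltages mentioned in the article, all at 4.2GHz
for volts in (1.2, 1.1, 1.05):
    print(f"{volts:.2f}V: ~{relative_power(volts, 4200.0):.2f}x the power of PC-133")
```

With these assumptions the three voltages come out at roughly 4.2x, 3.5x and 3.2x, in line with the figures quoted in the article.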

One area where this design might cause problems is enterprise computing. If you’re using a lot of DIMMs, considerably higher power, higher heat and higher cost aren’t exactly attractive. It’s unlikely that DDR4 4.2GHz will reach a server rack near you though: remember most servers today are only using 1,066MHz DDR3 whereas enthusiast PC memory now exceeds twice that.

Server technology will be slightly different and will use high performance digital switches to add additional DIMM slots per channel (much like PCI-Express switches, we expect, but with some form of error prevention). We also expect it to be used with the latest buffered LR-DIMM technology, although the underlying DDR4 topology will remain the same.

This is the same process the PCI bus went through in its transition to PCI-Express: replacing anything parallel in nature with a serial approach. DDR4 will become a point-to-point bus, and the parallelism is left to the memory controller itself with its multiple memory channels.

If we look at Intel’s upcoming LGA2011 socket that is anticipated to use a quad-channel memory interface and a single DIMM per channel, it’s now quite obvious that future CPUs using this socket stand a good chance of using DDR4, especially as LGA1366 has had a well defined three year lifespan. In the same timeframe DDR4 could see considerable market acceptance so it’s a smart move by Intel.

The big questions remain to be answered then: is it a consumer (cost) friendly process and how well does TSV cope with overclocking? We’ll have to wait for the first samples in 2011-2012 to find out.
